It’s hard not to draw a direct line between OpenAI and artificial intelligence. The startup’s groundbreaking invention, ChatGPT, is already tightly intertwined with so many areas of human life, even though it’s been around for less than two years.

Naturally, OpenAI and GPT, its family of increasingly advanced AI models, make up a thick thread of the tech narrative. But before GPT made history, how did its story begin?

In this article, we’re going to trace the roots of the technology, explore its evolution, and more. If you want to find out all about it, buckle up, because we’re starting the engine.

Written by: Alexey Sliborsky, Solution Architect


The Place of Google in the AI Race

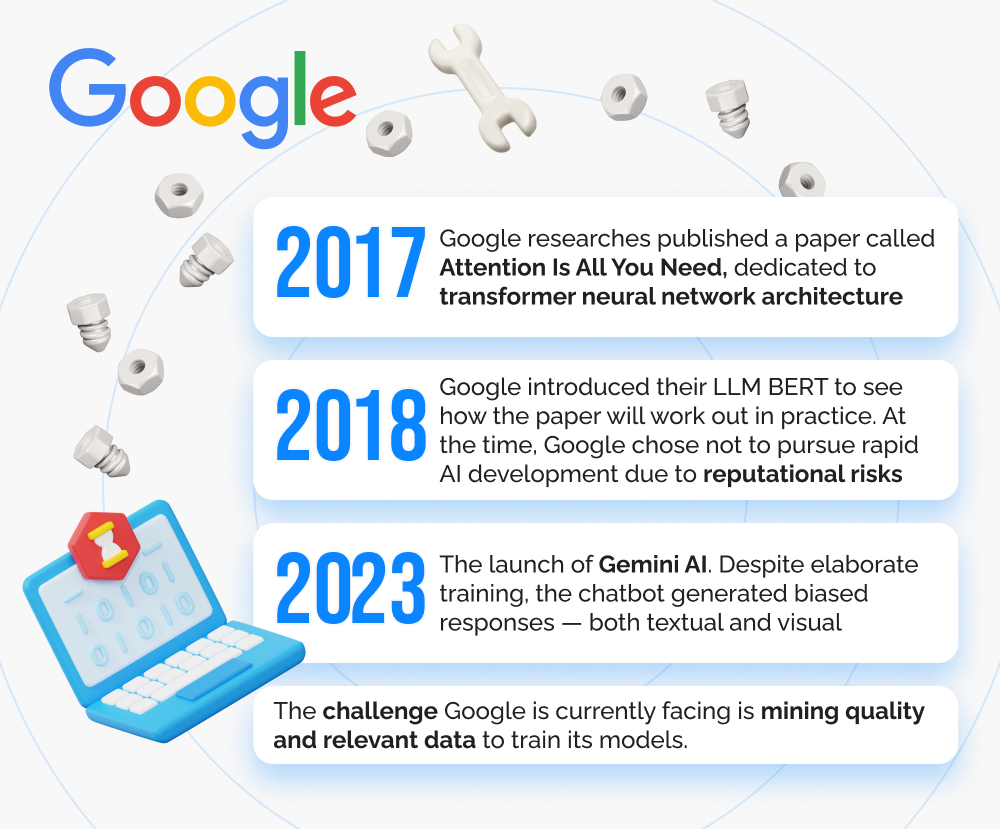

When we think of AI, Google is not the first company that comes to mind, even though it was Google that opened the door to the new era of artificial intelligence. After pioneering the field, the giant that dominates the search industry with over 90% market share now finds itself somewhat overshadowed in the race. Considering Google’s engineering talent and years of in-depth AI research, why didn’t it take the lead?

Putting the “T” in GPT

In 2017, eight Google researchers published a paper called “Attention Is All You Need”. The paper introduced the transformer, a new neural network architecture. This technological breakthrough would go on to reshape the world of natural language processing (NLP) and lay the foundation for AI as we know it. What exactly set it apart from its predecessors and made it so special?

Before transformers, language models were typically built on recurrent neural networks, or RNNs, which read text one word at a time and had trouble with long-term dependencies due to issues like vanishing gradients. Advanced RNNs such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) were developed to alleviate these problems. Even though they captured context better than basic RNNs, they were not as effective as transformers. Transformers rely on a mechanism called self-attention, which lets every word in a sequence weigh its relevance to every other word directly, no matter how far apart they are, and allows the whole sequence to be processed in parallel. As a result, transformers could recognize the context of words in a long sequence and generate contextually relevant responses.
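For readers who want to see the mechanism itself, below is a minimal, dependency-free Python sketch of scaled dot-product attention, the building block described in “Attention Is All You Need”: each query vector is compared with every key vector, the scores are turned into weights with a softmax, and those weights mix the value vectors. The function names and toy vectors are our own illustrative choices; real transformers also use learned projection matrices, multiple attention heads, and masking, and run on optimized matrix libraries.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over plain Python lists of vectors.

    For each query, score it against every key, normalize the scores
    with softmax, and return the weighted average of the value vectors.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Dot product of the query with each key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Mix the value vectors according to the attention weights.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Toy example: three "tokens" with 2-dimensional vectors.
# In self-attention, queries, keys, and values all come from the same tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(tokens, tokens, tokens))
```

Every output row is a blend of all the input tokens, weighted by how relevant each one is to the token asking the question, which is exactly what lets a transformer connect words that sit far apart in a sentence.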

In 2018, Google introduced its large language model (LLM) BERT, putting the ideas from the paper into practice. BERT helped the worldwide AI community realize the potential of LLMs and see how drastically the AI field was about to change. Yet, despite everyone’s expectations, the company didn’t pursue the big AI vision any further, and this decision made Google miss its chance to dominate the market while it was still unexplored and uninhabited. What Google didn’t do, OpenAI did. OpenAI leveraged the transformer architecture that the search giant had introduced and launched its very own AI model, its first GPT (generative pre-trained transformer). That move resulted in a tectonic shift in the AI industry, and the rest is history that we will talk about later in the article.

Still, the question remains: why did Google hesitate to launch a product after the transformer paper, considering that its failure to capitalize on its own 2017 research cost it $6.2 billion?

Why Didn’t Google Reap What It Sowed?

Two Google engineers, Noam Shazeer (one of the transformer paper’s co-authors) and Daniel De Freitas, built a chatbot on top of the technology and tried to convince their colleagues of just how potent it was. But Google executives rejected their idea of releasing a public demo or integrating it into the Google Assistant virtual helper. Google had a reputation to uphold, and LLMs didn’t meet its standards of safety and fairness for AI systems. As Sergey Brin, co-founder of Google, put it, this hesitance stemmed from the company’s fear of making mistakes and causing embarrassment. Reputational risk is the reason Google didn’t make a well-timed move despite having enormous resources at hand.

While Google was hesitating, Microsoft backed OpenAI and, after the launch of ChatGPT, integrated OpenAI’s technology into its Bing search engine. Even a small gain pays off: according to the source, “the way Microsoft sees it, just gaining another 1% of market share could give the company another $2 billion in ad revenue”.

The Current State of Google’s Mind

It seems that Google’s past indecisiveness is still keeping the tech giant from playing the AI game with confidence. Its most recent effort to gain speed in the AI race, the launch of Gemini AI, a text and image generation tool, led to a major controversy over diversity. In trying to eliminate racism and sexism from the tool’s outputs, Google ended up with historically inaccurate results. For example, when asked to generate images of America’s Founding Fathers, Gemini AI produced pictures of women and people of color. The training of the bot missed the mark, and unfortunately, this is not the first time it has happened.

It’s common knowledge that the results you get from an AI tool are only as good as the data you feed it. To train new models on top-quality information, you first have to be able to distinguish content generated by a person from content generated by a neural network. That requires access to content created by humans only.

According to Forbes, the corporation failed to invest in content creation on the open web in the 2000s, content it could now leverage to answer user queries. The open web lost popularity, and social media networks stepped into the limelight. These days, they shape the information we find on the Internet and affect the relevance of the data that is later served to users via AI tools. Google doesn’t have direct access to these networks. As a result, the company struggles to mine high-quality, relevant data, a serious challenge the tech giant has yet to overcome.

By the way, if you’re looking for a reliable tech partner to create AI solutions with, consider us. We have extensive expertise in the field and offer a free consultation, so if you’re interested, let’s schedule a meeting.

OpenAI and the Evolution of GPT: How It All Began, and How Far the Company Has Come

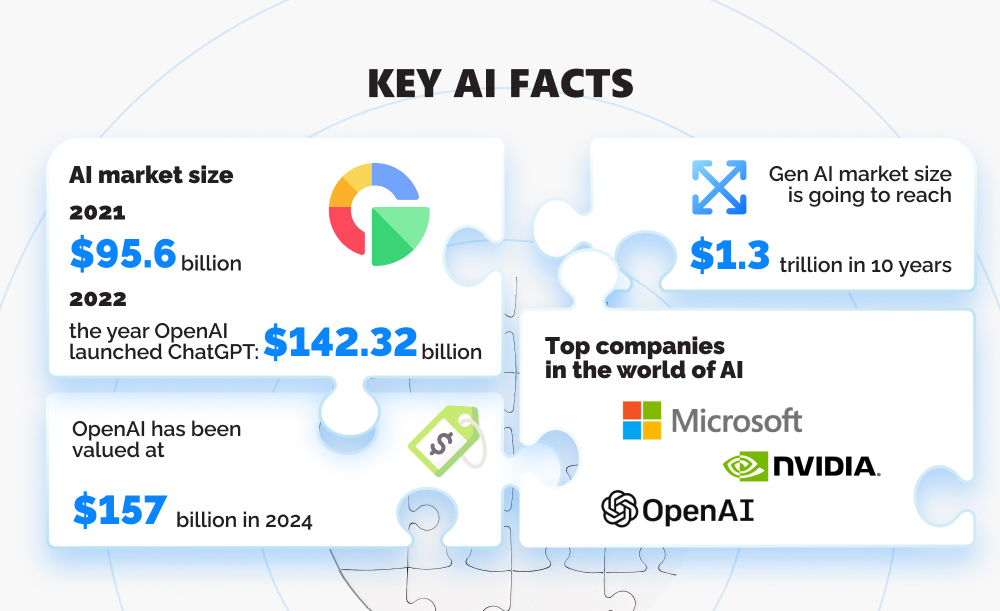

According to the source, in 2021, the AI market was valued at $95.6 billion. In 2022, this figure skyrocketed to $142.32 billion. It’s hard to separate that surge from the excitement around generative AI that culminated in OpenAI launching its ChatGPT platform in November of the same year. Today, together with Microsoft and NVIDIA, OpenAI dominates the world of generative artificial intelligence. As Bloomberg reports, the gen AI market is expected to grow at a compound annual growth rate (CAGR) of 42% over the next 10 years, reaching a market size of $1.3 trillion. OpenAI alone is currently valued at $157 billion (Bloomberg). As Fortune writes, this figure “would vault its market cap over companies like Ford, Target, and American Express.”
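The projection above is compound-growth arithmetic. As a rough sanity check, the Python sketch below (the helper names are our own, and it uses only the figures quoted in this section) works backward from a $1.3 trillion market reached at a 42% CAGR over 10 years to the starting size that projection implies. It also computes the one-year growth between the 2021 and 2022 figures.

```python
def project(start, rate, years):
    """Value after growing `start` by `rate` per year, compounded for `years` years."""
    return start * (1 + rate) ** years

def implied_start(end, rate, years):
    """Starting value that would compound to `end` at `rate` over `years` years."""
    return end / (1 + rate) ** years

# A 42% CAGR sustained for 10 years multiplies the market roughly 33-fold,
# so a $1.3 trillion endpoint implies a starting size of roughly $39 billion.
start = implied_start(1.3e12, 0.42, 10)
print(f"Implied starting size: ${start / 1e9:.1f}B")
print(f"Check: ${project(start, 0.42, 10) / 1e12:.2f}T after 10 years")

# One-year growth between the 2021 and 2022 figures quoted above.
print(f"2021 -> 2022 growth: {142.32 / 95.6 - 1:.1%}")
```

The takeaway is that a 42% CAGR is aggressive: sustained for a decade, it turns a market in the tens of billions into one measured in trillions.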

How did OpenAI rise to dominance in AI? How did things start, and where is the company now? Let’s take a trip down memory lane.

GPT-1

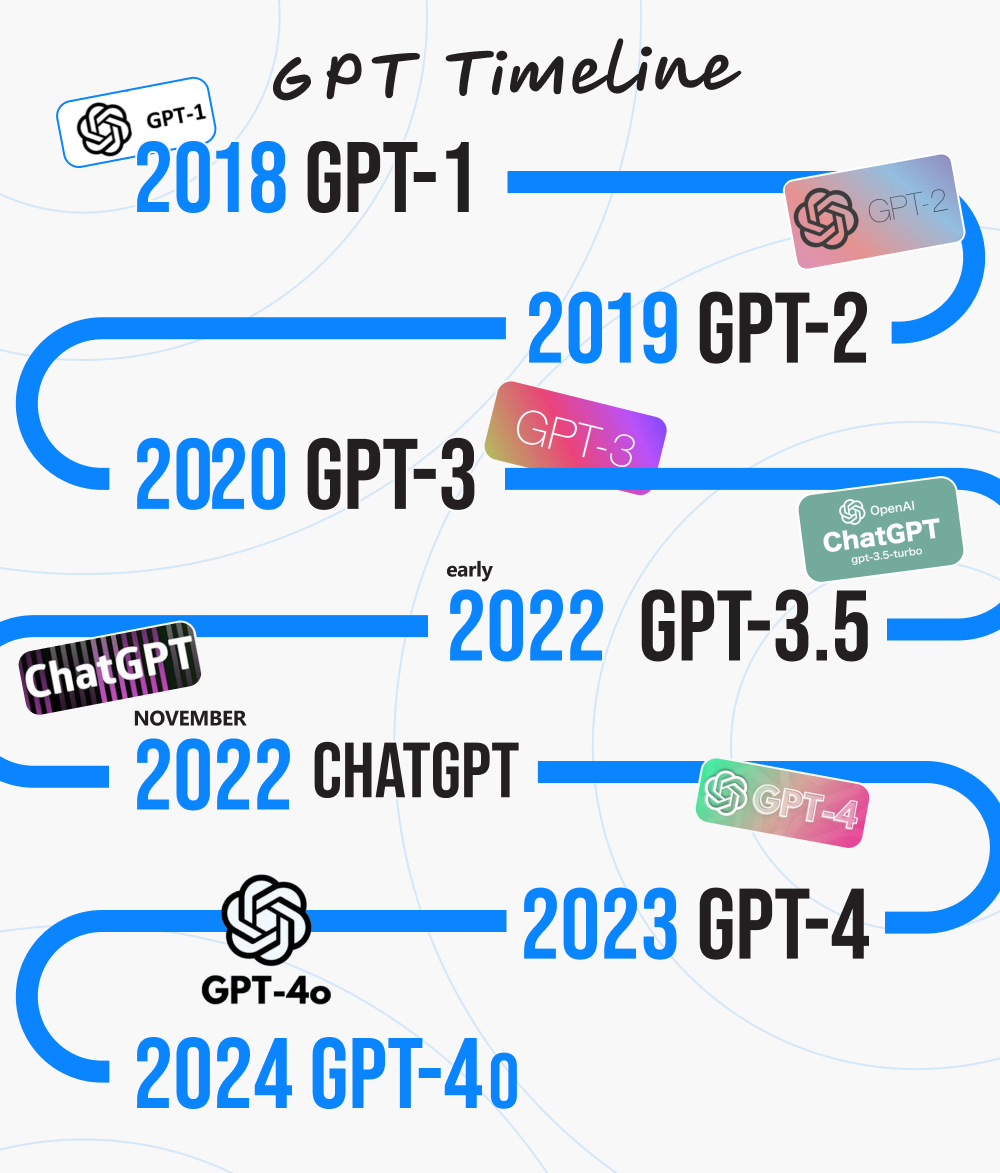

In 2018, OpenAI introduced the first GPT model. This GPT, or generative pre-trained transformer, later became known as GPT-1. It marked the start of a line of advanced AI models built on the transformer architecture that Google researchers introduced in 2017.

GPT-1 had about 117 million parameters. It was first pre-trained with unsupervised learning (i.e., the model learns from unlabeled data, with no labels or categories defined in advance by “supervisors”) on a large corpus of books, and then fine-tuned on smaller labeled datasets for specific tasks. It showed promising results in text generation: its achievements included solid attempts to create and rephrase short texts with human-like quality, answer generic queries, and translate languages.

Still, it was only GPT-1. It had a long road ahead of it as well as a few serious limitations. It didn't do well with longer texts and larger language structures, and its output was full of inaccuracies. The model also lacked a deeper understanding of the subjects it wrote about.

GPT-2

In 2019, OpenAI introduced GPT-2. The model had about 1.5 billion parameters. One of its achievements was that it could handle input twice as long as GPT-1 could (a context window of 1,024 tokens versus 512), which meant it could process larger text samples effectively. On top of that, GPT-2 was trained on text from 8 million web pages, which resulted in more diverse content that sounded even more human-like.

The model could produce short stories, poems, news articles (essentially, multi-paragraph text), and answers to simple questions, as well as summarize longer pieces of text. Just like GPT-1, GPT-2 was trained to predict the next word in a sentence based on the previous ones; its gains came mainly from scale (a bigger model, a longer context window, and far more training data) rather than from a new training objective. As a result, it could generate more coherent and relevant outputs. It became clear that this AI-powered technology had the potential to move beyond content creation and tackle fields like customer service.
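The “predict the next word” objective is easy to illustrate, even though GPT models implement it with a large neural network. The toy Python sketch below is a word-level bigram model, a deliberately much simpler technique: it counts which word follows which in a tiny made-up corpus and predicts the most frequent follower. Unlike GPT-2, which conditions on the whole preceding context, it looks only at the single previous word; the function names and corpus are illustrative.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        followers[prev][nxt] += 1
    return followers

def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat
```

Here "the" is followed by "cat" twice and by "mat" and "fish" once each, so "cat" wins. Scaling this idea up, from one word of context to over a thousand tokens and from simple counts to billions of learned parameters, is roughly the jump from this toy to GPT-2.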

As for limitations, GPT-2, like GPT-1, struggled with longer texts. And naturally, the mix of diverse content it consumed, combined with the unresolved issue of inaccuracy, caused a major controversy. Some of the model's outputs were biased and offensive, as there is a great deal of inappropriate content on the Internet. Additionally, there was a risk that its text generation functionality could be misused to produce deceptive content. Due to these ethical concerns, OpenAI staged the release of GPT-2, publishing smaller versions of the model first, to mitigate potential risks. The company implemented filtering mechanisms and moderation systems to deal with harmful or inappropriate content.

GPT-3

In June 2020, OpenAI released GPT-3, opening a new era for GPT models. Firstly, GPT-3 was trained on a much larger dataset: about 570 GB of filtered web text from Common Crawl, supplemented by sources like book corpora and Wikipedia. Secondly, it had 175 billion parameters. The model could perform tasks that were way out of reach for its predecessors.

GPT-3's greatest achievements were linked to its accessibility to ordinary users and its strong conversational skills. For many people, this was the first chance to interact with an LLM directly, and its responses could easily be mistaken for a real person's. GPT-3 also excelled at few-shot learning, i.e., it could pick up a new task from just a handful of examples included in the request, without any retraining.
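Few-shot learning happens entirely in the prompt: the examples are placed in the input text, and the model continues the pattern. The Python sketch below only assembles such a prompt. The `Input:`/`Output:` layout and the function name are our own illustrative choices, not a required OpenAI format, and no model is called.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: an instruction, worked examples, then the new query.

    `examples` is a list of (input, output) pairs. A model is expected to
    continue the text after the final "Output:" by following the pattern.
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("good morning", "bonjour")],
    "thank you",
)
print(prompt)
```

The design point is that nothing about the model changes: the same weights handle translation, classification, or formatting tasks depending only on which examples appear in the prompt.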

Let us walk you through the model's new and improved capabilities:

- GPT-3 improved its translation capabilities.

- The model showcased impressive generative AI capabilities that people could use for both professional and personal purposes. Its ability to mimic various writing styles and tones was the cherry on top of an already exceptional dish.

- GPT-3 made it possible to build AI chatbots, another advancement brought about by OpenAI. These chatbots offered personalized user interactions and could properly maintain context throughout a conversation.

- GPT-3's coding capabilities marked the rise of a whole new field where AI could make itself useful. Developers could write simple prompts and expect the model to deliver functions and code snippets in different programming languages.

- Medical research was yet another direction of GPT's expansion. The technology began to be used to analyze research papers and produce medical reports. That was also when AI tried on an even more important role, taking its first steps toward suggesting treatment scenarios.
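On maintaining context in a conversation, mentioned in the chatbot point above: in chat APIs such as OpenAI’s Chat Completions API, the model does not remember earlier requests. The application keeps the conversation history and sends it back with every new message, trimming old turns when the history grows too long for the model’s context window. The Python sketch below shows only that trimming step. The function name, the role/content message shape (which mirrors the common chat-message convention), and counting words instead of tokens are simplifying assumptions, and no API is called.

```python
def trim_history(messages, max_words):
    """Keep the first (system) message plus as many recent turns as fit.

    Assumes messages[0] is the system instruction. Words are counted as a
    rough stand-in for tokens; a real system would use the model's tokenizer.
    """
    system, turns = messages[0], messages[1:]
    budget = max_words - len(system["content"].split())
    kept = []
    # Walk backward from the newest turn so recent context is kept first.
    for msg in reversed(turns):
        cost = len(msg["content"].split())
        if cost > budget:
            break
        kept.append(msg)
        budget -= cost
    return [system] + list(reversed(kept))

history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "My name is Ana."},
    {"role": "assistant", "content": "Nice to meet you, Ana!"},
    {"role": "user", "content": "What is my name?"},
]
# With a tight budget, the oldest turns are dropped first.
print(trim_history(history, max_words=12))
```

With a 12-word budget, the turn where the user introduced herself is dropped, so a model receiving this history could no longer answer “What is my name?”. This is why a larger context window translates directly into a chatbot that “remembers” more.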

All these developments led to a deeper understanding of how impactful GPT in particular, and AI in general, would become. Yet new concerns started to surface. Training and fine-tuning LLMs demanded exceptional computational resources, which meant high energy consumption and a large carbon footprint. The need for sustainable practices in AI research and development became evident. OpenAI took a series of actions to alleviate the situation, but the conversation remains open.

GPT-3.5 and ChatGPT

In early 2022, OpenAI finished training GPT-3.5. This refined iteration of GPT-3 is generally described as having been trained on similar data and having a similar number of parameters, although OpenAI did not publish full details. A significant step forward, and a major difference from GPT-3, is that GPT-3.5 was improved via Reinforcement Learning from Human Feedback (RLHF).

The technique does exactly what its name says: it uses human feedback to align the model's outputs with the human value system. In practice, people rank several of the model's responses to the same prompt, a separate reward model is trained to predict those rankings, and the language model is then fine-tuned with reinforcement learning to produce responses the reward model scores highly. This allowed the model to generate responses that were not only correct but also matched what we'd call “a human angle”. For example, when someone tells you a joke, you can tell whether it's funny. But how can a machine tell? That's where RLHF steps in, turning human judgment into a mathematical signal the model can optimize.

This major improvement made it possible for OpenAI to use GPT-3.5 for its renowned invention, the ChatGPT platform. Launched in November 2022, this specialized application of the then-latest model was designed to generate conversational responses in a chat format. ChatGPT has reshaped the way people interact with AI worldwide, for both personal and business use. According to the source, it took ChatGPT five days (!) to reach one million users and two months to reach 100 million. On average, the application generates 1.7 billion site views every month.
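The heart of RLHF is turning human preferences into numbers. The Python sketch below shows one step commonly used for this: the pairwise, Bradley-Terry-style loss for training a reward model. When labelers prefer response A over response B, the loss is small if the reward model already scores A higher and large if it scores B higher. The reward values are made up for illustration, and the later reinforcement-learning stage that fine-tunes the language model against this reward is not shown.

```python
import math

def preference_probability(reward_chosen, reward_rejected):
    """Probability that the 'chosen' response is preferred, via a logistic model."""
    return 1.0 / (1.0 + math.exp(-(reward_chosen - reward_rejected)))

def pairwise_loss(reward_chosen, reward_rejected):
    """Negative log-likelihood of the human preference: -log(sigmoid(r_c - r_r))."""
    return -math.log(preference_probability(reward_chosen, reward_rejected))

# Hypothetical reward-model scores for two answers to the same prompt,
# where human labelers preferred the first answer.
print(round(pairwise_loss(2.0, -1.0), 4))  # small loss: ranking agrees with labelers
print(round(pairwise_loss(-1.0, 2.0), 4))  # large loss: ranking disagrees
```

Minimizing this loss over many labeled pairs teaches the reward model to score responses the way people would. That learned score is the “mathematical signal” the language model is then optimized against.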

GPT-4

In March 2023, OpenAI released GPT-4. It was trained on more data and has more parameters than its predecessors, although OpenAI did not make the exact numbers public, and the same goes for the architecture. In addition, GPT-4 can understand not only text but also images, so it can process textual and visual information, such as diagrams and infographics, in a single request. GPT-4 could also grasp human intent, context, and nuance better than ever before, and it could generate text in a wide range of languages. The new model marked the beginning of an era in which conversational AI went mainstream.

Let's take a look at a few other achievements by GPT-4. It can:

- Follow user intention, e.g., complete sentences and predict alternatives for insufficient inputs;

- Write code in different programming languages;

- Draft long conversational texts, such as emails, as well as clauses for legal documents;

- Change behavior depending on user request;

- Search the Internet in real time (through ChatGPT's browsing feature);

- Generate content that is less likely to be inaccurate or offensive.

In May 2024, OpenAI launched GPT-4o, which marked “a step towards much more natural human-computer interaction”. It accepts any combination of text, audio, image, and video as input and can generate any combination of text, audio, and image as output. Notably, GPT-4o can “respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.” (OpenAI)

For a brief overview of the GPT evolution, take a look at the comparison table below:

| Model | Achievements | Limitations |
| --- | --- | --- |
| GPT-1 | Could generate contextually relevant short texts. | With only 117 million parameters, GPT-1 had trouble with larger language structures. It couldn’t be applied for many tasks, and outputs were often factually incorrect. |
| GPT-2 | Could generate longer and more diverse texts due to 1.5 billion parameters and more extensive training. Could handle different tasks, like text summaries, answering questions, and translation. | Could hallucinate facts and be biased. Its text generation abilities birthed ethical concerns. There were disputes about the misuse of the technology and creation of misleading content. |
| GPT-3 | Due to 175 billion parameters, the model could perform various tasks, from content generation and customer support to medical research and coding. Its NLP capabilities made it possible to confuse GPT-3 with a real human. Anyone could directly interact with the LLM and use it for both personal and business purposes. | A strong need for sustainable practices in the field of AI research and development was discovered. Training and fine-tuning LLMs requires exceptional computational resources. That leads to major energy consumption and carbon footprint. |
| GPT-3.5 | All that GPT-3 offered, but improved with the RLHF. Served as a foundation for ChatGPT. | ChatGPT could provide factually incorrect information, struggle with exploring the subject of a user query on a deeper level, and generate a considerable amount of repetitive, irrelevant information. |
| GPT-4 | Became more reliable by producing significantly fewer inaccurate, irrelevant outputs. Could be better fine-tuned for specific tasks across a larger variety of industries. Decreased resource consumption, more accessible subscription models. | Still might hallucinate facts and make errors in reasoning. |

OpenAI Today

In an interview with Latent Space, David Luan, whose team at OpenAI ended up making GPT-1, GPT-2, and GPT-3, said, “You know, every day we were scaling up GPT-3, I would wake up and just be stressed. And I was stressed because, you know, you just look at the facts, right? Google has all this compute. Google has all the people who invented all of these underlying technologies […] And it turned out the whole time that they just couldn't get critical mass […] We were able to beat them simply because we took big swings, and we focused.” It’s remarkable how things played out, isn’t it?

Undoubtedly, OpenAI’s AI journey hasn’t all been smooth sailing. In 2024, the company has been facing internal turmoil, including a major talent exodus. The global AI community was particularly shocked when Mira Murati, who joined OpenAI in 2018 and had served as its CTO since 2022, stepped down from her role to “do her own exploration”. As of 2024, only three of the 11 co-founders remain, and that does raise questions. It seems that OpenAI is standing on the brink of AGI (artificial general intelligence). So why are major players leaving? Some believe it is because the company prioritizes new product launches over safety. As Futurism reports, after Ilya Sutskever, who co-led OpenAI's "Superalignment" AI safety team, left in May 2024, the company dissolved the team entirely. A former team member shared that his own departure was due to “realizing that it [OpenAI] was pursuing profit at the cost of safety.”

Time will surely reveal more about these sudden departures. Still, regardless of what comes next, OpenAI’s fundamental contribution to modern AI speaks for itself. The world as we knew it before GPT is gone, and we’re actively exploring the new possibilities the technology brings. Banking and finance, sales and marketing, retail, healthcare, programming, hospitality: the list of industries that benefit from OpenAI’s invention goes on. Duolingo, which uses OpenAI’s GPT technology to offer its users better, more personalized digital education, the job platform Indeed, which partnered with OpenAI to deliver contextual job matching, and many other businesses agree that the value GPT brings to the table is awe-inspiring.

Want to find out more about how GPT is applied across companies and industries? Check out our latest article to uncover the subject.

OpenAI vs… Meta?

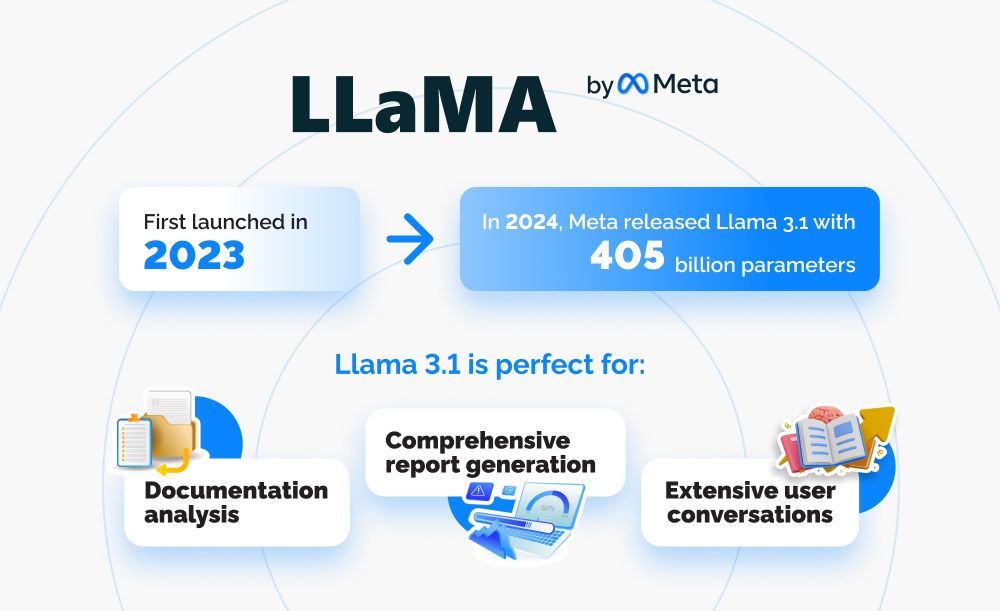

Of course, OpenAI is not the only participant in the AI race. Somewhat surprisingly, one of the companies that decided to board the AI express is Meta. Mark Zuckerberg noted that Meta’s LLM, Llama, first launched in 2023, is a solid rival to both of GPT’s “parents”, OpenAI and Google. In fact, that was Meta’s intention: to challenge the dominance of GPT-4 and Gemini.

Llama 3.1, launched in 2024, has 405 billion parameters in its largest version. That is far fewer than the roughly 1.76 trillion parameters that unofficial estimates attribute to GPT-4 (OpenAI has not disclosed the figure), but it is more than enough to handle complex language tasks. Llama 3.1 also offers good support for non-English languages and is particularly well-suited for document analysis, comprehensive report generation, and extended conversations. While these capabilities don’t set Llama far apart from GPT-4, its most significant difference from OpenAI’s technology is that Llama is open-source (more precisely, its model weights are openly available). By bringing advanced AI within reach of a much wider audience, that openness might disrupt OpenAI’s business model.

As The New York Times writes, “Roughly 10 million ChatGPT users pay the company a $20 monthly fee, according to the documents. OpenAI expects to raise that price by $2 by the end of the year [2024], and will aggressively raise it to $44 over the next five years, the documents said […] OpenAI predicts its revenue will hit $100 billion in 2029, which would roughly match the current annual sales of Nestlé or Target.” And while the revenue predictions sound nice, the prospect of paying $44 a month for a subscription does not. This is where Llama comes in: the model is free to access. As Meta states, its technology can be used free of charge by anybody, researchers and businesses included, even for commercial purposes. Zuckerberg expressed his hope that the open-access strategy will contribute to the successful launch of other startups and products, “giving Meta greater sway in how the industry moves forward.” (The Los Angeles Times). Additionally, unlike OpenAI, whose business depends on selling access to its models, Meta earns most of its revenue from social media and targeted advertising. Hence, not treating its AI advancements as a primary source of income does not threaten the stability of its finances.
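The New York Times figures lend themselves to a quick back-of-the-envelope check. The Python sketch below (helper names are our own; it ignores churn, regional pricing, and other plans) estimates annual revenue from roughly 10 million subscribers at $20 per month. It also computes the average annual price increase implied by going from $20 to $44 over five years.

```python
def annual_subscription_revenue(subscribers, monthly_price):
    """Yearly revenue if every subscriber pays the monthly price for 12 months."""
    return subscribers * monthly_price * 12

def implied_annual_increase(start_price, end_price, years):
    """Average yearly growth rate that takes start_price to end_price."""
    return (end_price / start_price) ** (1 / years) - 1

revenue = annual_subscription_revenue(10_000_000, 20)
rate = implied_annual_increase(20, 44, 5)
print(f"~${revenue / 1e9:.1f}B per year; price rises ~{rate:.1%} per year")
```

With these inputs, the sketch gives about $2.4 billion a year from subscriptions and an average price increase of roughly 17% per year. That is a steep climb for users, and exactly the kind of gap a free alternative like Llama can exploit.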

So far, Llama has already been successfully leveraged by Goldman Sachs, AT&T, Spotify, Accenture, and a bunch of other companies. As Meta writes, “Llama models are approaching 350 million downloads on Hugging Face to date — an over 10x increase from where we were about a year ago.” To us, it definitely looks like OpenAI is facing a new rival.

The Big Game Continues

The AI development road is bumpy. What does it take to make it through, and when exactly can you say that you’ve made it? Judging by what Google and OpenAI were up against, the answer to the first question seems to be a willingness to take risks and be prepared to fail. The second is harder to answer. Perhaps you’ve made it once you’re somewhere near OpenAI’s level and your success has inspired Mark Zuckerberg to launch a product just to challenge you.

Surely, there are still hurdles that OpenAI has to overcome, and safety concerns seem to be high on that list. But if progress were fast and easy, it wouldn’t be so exciting to watch, would it? So let’s stay tuned for whatever OpenAI is cooking and remember that the AI race is a race. Who knows what fascinating new contestants tomorrow will bring?

Interested in building your own AI product? Qulix has profound expertise in the field and knows how to make GPT deliver its best. Contact us to discuss your next smart solution.

Contacts

Feel free to get in touch with us!

Call us at +44 151 528 8015

E-mail us at request@qulix.com